Members : Sungheum Kim, Jiyoung Jung

Associated center :

Electronics and Telecommunications Research Institute (ETRI),

Gwangju Institute of Science and Technology (GIST)

Overview

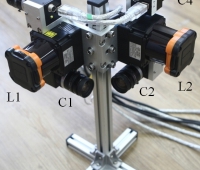

1. Aim : Foreground / Background Segmentation in a Multi-view Camera System

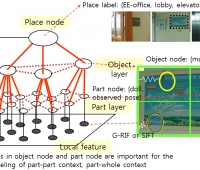

The project assigned to KAIST develops an algorithm for extracting objects of interest from natural, dynamic scenes captured by movable PTZ (pan-tilt-zoom) cameras. About 40 digitally controlled cameras are distributed around a hemispherical stadium and focus on the players. Ideally, the algorithm computes exact foreground masks for each view in real time.

2. Background : Pre-processing for Reconstructing, Rendering, and Editing 3D Video Objects

Recently, the demand for creating and manipulating 3D content has been growing. The project starts from this context: current broadcasting systems should be extended to deliver a new type of visual impact in the next generation.

One essential technique is multi-view object extraction in more general, challenging situations. This step matters not only because it provides the initial model for 3D reconstruction from multiple views, but also because it is needed to edit 3D video objects and, further, to manipulate virtual replays. However, the problem in its general form is mathematically ill-posed, which raises many difficulties to overcome.

3. Contributions

We began with strict conditions and are moving on to more general problems: from static to dynamic backgrounds, from a single object to multiple objects, and from indoor scenes to outdoor natural scenes. The following ideas address the specific goals set for each year.

1st Year Goal: Segmenting foreground objects from static backgrounds (FINISHED)

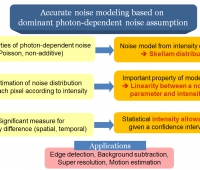

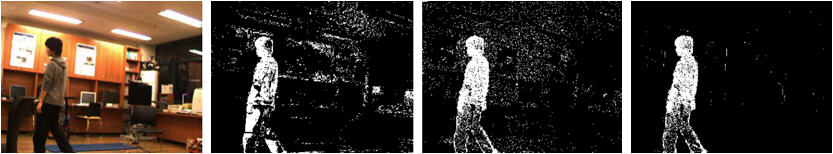

1) Background Subtraction : Background subtraction is widely used and is particularly useful under this condition. Its results typically depend heavily on maintaining a correct background model. In this work, we propose a modified kernel density estimation (KDE) method that handles abrupt changes in global intensity as well as saturated pixels.

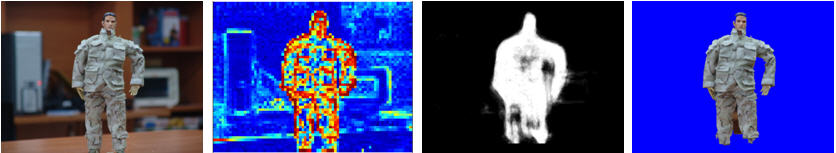

Figure panels: Input | MoG | KDE | The Proposed Method

[1] Kapje Sung, Youngbae Hwang, In-So Kweon, "Robust Background Maintenance for Dynamic Scenes with Global Intensity Level Change", 5th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), 2008.
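To make the idea concrete, here is a minimal Python sketch of per-pixel KDE background subtraction on flattened grayscale frames. It is not the method of [1]: the global-gain compensation (frame median divided by background median, with saturated pixels left out of that estimate) is an assumption standing in for the proposed modification, and the function names, Gaussian kernel, bandwidth, and threshold are all hypothetical.

```python
import math
from statistics import median


def kde_probability(value, samples, bandwidth):
    """Gaussian kernel density estimate of p(value) from one pixel's background samples."""
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))
    return norm * sum(math.exp(-0.5 * ((value - s) / bandwidth) ** 2) for s in samples)


def estimate_gain(frame, history, saturation=255):
    """Hypothetical global intensity compensation: ratio of the frame median to the
    background median, ignoring saturated pixels whose true intensity is unknown."""
    bg_means = [sum(h[i] for h in history) / len(history) for i in range(len(frame))]
    pairs = [(f, b) for f, b in zip(frame, bg_means) if f < saturation and b > 0]
    if not pairs:
        return 1.0
    return median([f for f, _ in pairs]) / median([b for _, b in pairs])


def segment(frame, history, bandwidth=10.0, threshold=1e-3, saturation=255):
    """Label a pixel foreground (True) when its gain-compensated value is unlikely
    under that pixel's KDE background model built from past frames."""
    gain = estimate_gain(frame, history, saturation)
    mask = []
    for i, value in enumerate(frame):
        samples = [h[i] for h in history]
        mask.append(kde_probability(value / gain, samples, bandwidth) < threshold)
    return mask


history = [[100, 100, 100, 100], [102, 101, 99, 100], [98, 99, 101, 100]]
frame = [150, 150, 150, 240]  # whole scene brightened by 1.5x, last pixel is a new object
print(segment(frame, history))
```

With a background history near intensity 100 and a new frame uniformly brightened by 1.5x, the compensation keeps the brightened background pixels labelled as background, while the pixel that rose to 240 is still flagged as foreground. Without some form of global compensation, every pixel of the brightened frame would fall far from its KDE samples.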

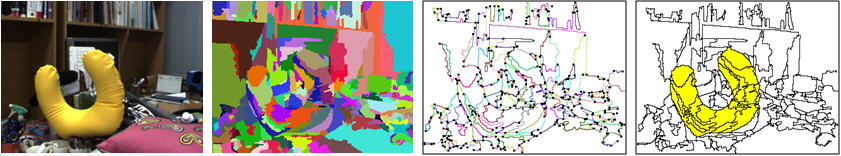

2) Boundary Matching : Boundary shape is a useful form of high-level shape information. We treat boundary matching as finding the optimal cycle in a graph and, using the product graph concept, consider every possible correspondence between sub-parts of the templates and the object boundaries. The proposed algorithm can handle even deformable objects with only a few templates, under complex background clutter.

Figure panels: Input | Over-segmentation | Product Graph | Optimal Segments

[2] Hanbyul Joo, Yekeun Jeong, Olivier Duchenne, Seong-Young Ko, In-So Kweon, "Graph-Based Robust Shape Matching for Robotic Application", IEEE International Conference on Robotics and Automation, 2009.

[3] Hanbyul Joo, Yekeun Jeong, In-So Kweon, "Bottom-Up Segmentation Based Robust Shape Matching in the Presence of Clutter and Occlusion", International Workshop on Advanced Image Technology (IWAIT), 2009.

[4] Hanbyul Joo, Yekeun Jeong, In-So Kweon, "Graph Generation from Over-segmentation for Graph Based Approaches", 15th Korea-Japan Joint Workshop on Frontiers of Computer Vision (FCV), 2009.

Hanbyul Joo, "Graph-based Boundary Matching for Deformable Objects", Master's thesis.
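The product-graph search can be sketched in Python as follows. It assumes each template part and each over-segmented image boundary piece is summarized by a single scalar descriptor (for example an edge orientation in degrees) and that a match costs the absolute descriptor difference; these assumptions and the names `match_boundary`, `segments`, and `adjacency` are hypothetical. The sketch forces a one-to-one, in-order assignment and is much simpler than the algorithm of [2], [3], [4].

```python
import math


def match_boundary(template, segments, adjacency):
    """Search the product graph (template part x image segment) for a minimum-cost
    cycle that assigns one image segment to each template part, in order.

    template:  descriptors t_0..t_{m-1} of a closed template boundary
    segments:  dict segment id -> descriptor of an image boundary piece
    adjacency: dict segment id -> set of neighbouring segment ids
    Returns (cost, [segment for t_0, ..., segment for t_{m-1}]) or (inf, None).
    """
    m = len(template)
    best_cost, best_path = math.inf, None
    for start, start_desc in segments.items():
        # Product-graph node (0, start); dp[s] = cheapest partial path ending at (i, s).
        dp = {start: (abs(template[0] - start_desc), [start])}
        for i in range(1, m):
            nxt = {}
            for s, (cost, path) in dp.items():
                for n in adjacency.get(s, ()):
                    if n in path:
                        continue  # no revisits; greedy, since dp keeps one path per node
                    cand = cost + abs(template[i] - segments[n])
                    if n not in nxt or cand < nxt[n][0]:
                        nxt[n] = (cand, path + [n])
            dp = nxt
        # Close the cycle: the last segment must be adjacent to the start segment.
        for s, (cost, path) in dp.items():
            if start in adjacency.get(s, ()) and cost < best_cost:
                best_cost, best_path = cost, path
    return best_cost, best_path


# A square template matched against four square edges plus one clutter segment E.
template = [0, 90, 180, 270]
segments = {"A": 0, "B": 90, "C": 180, "D": 270, "E": 45}
adjacency = {"A": {"B", "D", "E"}, "B": {"A", "C"}, "C": {"B", "D", "E"},
             "D": {"A", "C"}, "E": {"A", "C"}}
print(match_boundary(template, segments, adjacency))
```

Here the clutter segment `E` is connected to the true boundary but is never chosen, because any cycle through it pays a higher descriptor cost than the cycle through `A`, `B`, `C`, `D`.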

3) Blur as a Visual Cue : When taking an image, we usually focus on a foreground object, so the background region becomes blurred. Under this condition, we combine blur, spatial gradient, and motion information to extract foreground pixels. In particular, for relative blur analyzed from two views taken at different focal planes, the foreground region is predicted more accurately by shape-filter-based feature integration and random-forest-based classification. The final result is optimized with a color smoothness constraint.

Figure panels: Input | Blur Feature | Prediction | Graphcut

[5] Kapje Sung, Sungheum Kim, In-So Kweon, "Foreground Extraction Using Blur Information", 15th Korea-Japan Joint Workshop on Frontiers of Computer Vision (FCV), 2009.

Kapje Sung, "A Probabilistic-Model based Approach for Foreground Extraction using Multi-Feature Integration", Master's thesis.
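A minimal Python sketch of the raw relative-blur cue, assuming two registered grayscale views: one focused on the foreground plane and one focused on the background plane. Local gradient energy serves as a hypothetical sharpness measure, and a plain per-pixel comparison replaces the shape-filter features, random-forest classifier, and graph-cut optimization described above; the function names and window radius are illustrative only.

```python
def gradient_energy(img, radius=1):
    """Local sharpness: squared forward differences summed over a (2r+1)x(2r+1) window."""
    h, w = len(img), len(img[0])
    g = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = img[y][x + 1] - img[y][x] if x + 1 < w else 0
            dy = img[y + 1][x] - img[y][x] if y + 1 < h else 0
            g[y][x] = dx * dx + dy * dy
    energy = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            energy[y][x] = sum(
                g[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            )
    return energy


def relative_blur_mask(near_focus, far_focus, radius=1):
    """Foreground (True) where the view focused on the foreground plane is locally
    sharper than the view focused on the background plane."""
    e_near = gradient_energy(near_focus, radius)
    e_far = gradient_energy(far_focus, radius)
    return [[a > b for a, b in zip(rn, rf)] for rn, rf in zip(e_near, e_far)]


# Left half textured in the near-focus view, right half textured in the far-focus view.
near = [[0, 100, 0, 50, 50, 50]] * 3
far = [[50, 50, 50, 0, 100, 0]] * 3
print(relative_blur_mask(near, far)[1])
```

Near the boundary, and in flat, textureless regions that produce no gradient energy in either view, the comparison defaults to background; a smoothness-based optimization such as the graph cut step would be needed to fill those pixels in.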

2nd Year Goal: Segmenting foreground objects from dynamic backgrounds (ON-GOING)

4) Synthetic Aperture System : A blurry background can be generated effectively by summing the rays captured from translated views. Using the magnified synthetic blur obtained from the aligned images, the method optimizes the initial cost and segments the region of interest from its background plane. In the alignment step, we use oriented gradient images as the similarity measure; compared with measuring color intensities directly, aligning gradient directions performs better in several respects. The proposed algorithm is applicable to a system with many sub-cameras or to a single moving camera.

Figure panels: A Linear Guide | One of the inputs (1/8) | Synthetic blur | Belief Propagation

[6] Sungheum Kim, Kapje Sung, In-So Kweon, "Extraction of a Focused Object", 21st Image Processing and Image Understanding Workshop (IPIU), 2009.

[7] Sungheum Kim, Kapje Sung, In-So Kweon, "Object Extraction using Blur Magnification and Analysis", 6th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), 2009.
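A 1-D Python sketch of shift-and-add synthetic aperture for a camera on a linear guide. It assumes the per-view disparity of the object plane is already known (the oriented-gradient alignment step is not reproduced) and that the background is distant enough to have zero disparity. Pixels whose aligned samples agree across views are taken as lying on the focal plane; the variance threshold `tau` and all function names are hypothetical, and the variance test stands in for the cost optimization used in [6], [7].

```python
from statistics import pvariance


def render_view(background, obj, obj_start, k, disparity):
    """Hypothetical 1-D scene for camera position k on a linear guide: the background
    is far away (zero disparity) and the object shifts by k * disparity pixels."""
    view = []
    for y in range(len(background)):
        u = y - k * disparity - obj_start
        view.append(obj[u] if 0 <= u < len(obj) else background[y])
    return view


def refocus(views, disparity):
    """Shift-and-add stacks: sample view k at x + k*disparity so the plane with this
    disparity lines up across views while other depths are smeared."""
    width = len(views[0])
    return [
        [v[x + k * disparity] for k, v in enumerate(views) if 0 <= x + k * disparity < width]
        for x in range(width)
    ]


def synthetic_aperture_mask(views, disparity, tau=1.0):
    stacks = refocus(views, disparity)
    # Synthetic-aperture image: the focal plane stays sharp, other depths are averaged into blur.
    image = [sum(s) / len(s) for s in stacks]
    # Samples that agree across several views are treated as lying on the focal plane.
    mask = [len(s) > 1 and pvariance(s) < tau for s in stacks]
    return image, mask


background = [10, 60, 20, 70, 30, 80, 40, 90, 50, 0]
obj = [200, 210, 220]
views = [render_view(background, obj, 3, k, 1) for k in range(3)]
image, mask = synthetic_aperture_mask(views, 1)
print(mask)
```

With three views and an object shifting one pixel per view, the three object pixels align exactly and are the only ones marked, while the textured background is averaged into blur. Pixels near the border that only one view covers are left unmarked, since a single sample gives no evidence of agreement.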

Last updated Oct. 14, 2009.