
1. Purpose of project.

Developing self-localization technology for mobile surveillance robots.

To track and follow people or objects, a mobile robot needs self-localization and self-driving algorithms. In this research, we propose a sensor-fusion-based self-localization system that can be applied to intelligent robots operating outdoors. Outdoors, vision-based localization approaches typically suffer from several obstacles: abrupt illumination changes, ambiguous scene images, and motion blur (Fig 1, 2).

< Fig 1. Abrupt illumination changes >

< Fig 2. Limitations of the vision-based approach - ambiguous scene images and motion blur >

To solve this problem, we propose a sensor-fusion system. The system, composed of an LRF (Laser Range Finder), multiple cameras, and a DGPS (Differential Global Positioning System) receiver, can be applied to the position estimation process. Therefore, even in bad weather or at night, the robot can estimate its location correctly.

2. Self-localization techniques.

We have developed several techniques to achieve this goal (Fig 3, 4, 5).

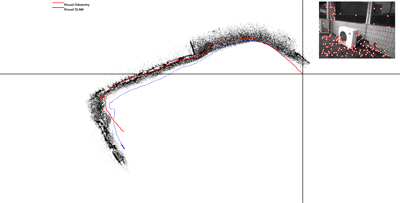

< Fig 3. Stereo camera based real-time localization technique >

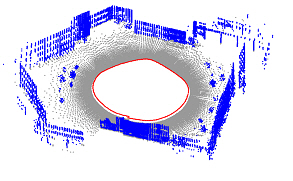

< Fig 4. Single camera based localization algorithm >

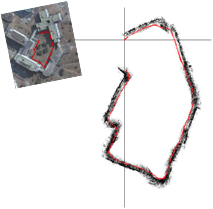

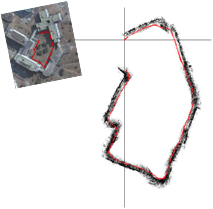

< Fig 5. Camera-Laser fusion system for estimating location >


3. Sensor fusion system.

If position is estimated only from local sensors such as cameras or laser sensors, error accumulates over time because these sensors have no global reference. The sensor-fusion algorithm addresses this problem. Specifically, using local sensors such as a camera or a laser, we can estimate the mobile robot’s motion and direction. In addition, a global sensor, DGPS, provides accurate absolute location information. Overall performance therefore improves when the two kinds of data are used together.
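The idea of correcting accumulated local error with occasional global fixes can be illustrated with a small sketch. This is not the project's actual algorithm, which the text does not specify; it assumes a simple Kalman-style blend, and the names `FusedPosition` and `simulate`, the 5 % odometry drift, and the variance values are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class FusedPosition:
    """2D position estimate with one isotropic variance (hypothetical model)."""
    x: float = 0.0
    y: float = 0.0
    var: float = 0.0  # uncertainty of the estimate, in m^2

    def predict(self, dx: float, dy: float, motion_var: float) -> None:
        """Apply a relative motion reported by a local sensor (camera/LRF)."""
        self.x += dx
        self.y += dy
        self.var += motion_var  # uncertainty grows with every relative step

    def correct(self, gx: float, gy: float, fix_var: float) -> None:
        """Blend in an absolute fix from a global sensor (e.g. DGPS)."""
        gain = self.var / (self.var + fix_var)  # Kalman gain, between 0 and 1
        self.x += gain * (gx - self.x)
        self.y += gain * (gy - self.y)
        self.var *= 1.0 - gain  # a fix reduces the uncertainty


def simulate(steps: int = 100, fix_every: int = 10):
    """Return (dead_reckoning_error, fused_error) in metres after `steps`."""
    dead = FusedPosition()   # local sensors only
    fused = FusedPosition()  # local sensors plus periodic global fixes
    true_x = 0.0
    for i in range(1, steps + 1):
        true_x += 1.0  # the robot really moves 1 m along x
        dx = 1.05      # assumed odometry that over-reports by 5 % (drift)
        dead.predict(dx, 0.0, motion_var=0.01)
        fused.predict(dx, 0.0, motion_var=0.01)
        if i % fix_every == 0:  # an absolute fix arrives every few steps
            fused.correct(true_x, 0.0, fix_var=0.04)
    return abs(dead.x - true_x), abs(fused.x - true_x)


if __name__ == "__main__":
    dead_err, fused_err = simulate()
    print(f"dead reckoning error: {dead_err:.2f} m, fused error: {fused_err:.2f} m")
```

With these made-up numbers, the local-only estimate drifts about 5 m after 100 steps, while the fused estimate stays well under 1 m, because each global fix pulls the position back and shrinks the variance.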


MTMMC: A Large-Scale Real-World Multi-Modal Camera Tracking Benchmark

Visual Perception for Autonomous Driving in Day and Night

Megacity Modeling

Intelligent Robot Vision System Research Center (Localization technology developm...

i3D: Interactive Full 3D Service (Foreground/Background Segmentation Part)

National Research Lab: Development of Robust Vision Technology for Object Recogni...

Intelligent Robot Vision System Research Center (Detection Tracking Research Team)
